MY PROJECTS

COMPUTER VISION

Bounding Box Object Detection

This computer vision project performs real-time object detection on a live webcam feed. Using OpenCV, I built a system that draws a bounding box around a target object in each captured frame. In this application, the bounding box appears whenever a yellow object enters the camera's field of view.

Key Features:

Color-based Detection: The algorithm identifies yellow objects by thresholding each frame on its hue, saturation, and value (HSV) components.

OpenCV Integration: The project uses OpenCV's VideoCapture for webcam access, cvtColor for color space conversion (BGR to HSV), and inRange to select the pixels that fall inside the yellow range.

Bounding Box Precision: I used the getbbox function from the PIL (Pillow) library to get the coordinates of the smallest rectangle enclosing the detected yellow region.

Click Here to Access Project

Object Tracking and Recognition with YOLOv8

Click Here to Access Project

Project Overview: This computer vision project focuses on real-time object tracking and recognition in video streams. Using the YOLOv8 model from the Ultralytics library, the project captures video frames, runs each frame through the YOLO object detection model, and tracks the detected objects with bounding boxes. The result is a system that detects and identifies the objects in a video as it plays.

Technologies Used:

- Ultralytics Library

- YOLOv8 Object Detection Model

- OpenCV for Video Processing

Results and Impact: The project delivers real-time object tracking and recognition in videos. Bounding boxes highlight each detected object, so users can see at a glance what the video stream contains. This project lays the foundation for applications in surveillance, automated monitoring, and other domains where understanding video content is essential.
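The capture, detect, and track loop described in the overview might look something like the sketch below. It is an illustration under assumptions, not the project's code: the helper names `track_frames`, `read_frames`, and `main`, the `yolov8n.pt` weights file, and the webcam source are all chosen for the example.

```python
def track_frames(model, frames):
    """Yield an annotated copy of each frame, using the model's built-in tracker."""
    for frame in frames:
        # persist=True tells the tracker to keep track IDs across successive calls
        results = model.track(frame, persist=True, verbose=False)
        # plot() returns the frame with boxes, labels, and track IDs drawn on it
        yield results[0].plot()


def read_frames(source=0):
    """Yield frames from a video file path or camera index until the stream ends."""
    import cv2

    cap = cv2.VideoCapture(source)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame
    finally:
        cap.release()


def main():
    """Display tracked objects from the default webcam; press q to quit."""
    import cv2
    from ultralytics import YOLO

    model = YOLO("yolov8n.pt")  # assumed weights; any YOLOv8 detection model should work
    for annotated in track_frames(model, read_frames(0)):
        cv2.imshow("YOLOv8 tracking", annotated)
        if cv2.waitKey(1) & 0xFF == ord("q"):
            break
    cv2.destroyAllWindows()
```

Running `main()` opens the display window. Keeping the tracking step in its own generator means the same loop can be pointed at a video file instead of a webcam by passing a path to `read_frames`.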

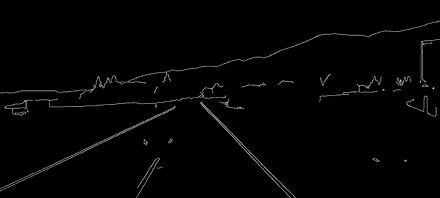

Lane Detection and Tracking in Video using Computer Vision

In this project, I developed a lane detection system using OpenCV and computer vision techniques to identify and track lane markings in video footage. The goal was to create an automated system that could process road videos and detect lane boundaries in real time, a critical feature for autonomous vehicle systems.

Key components of the project:

- Canny Edge Detection: I used the Canny edge detection algorithm to extract the most prominent edges in the video frames, focusing on lane markings and road boundaries.

- Region of Interest (ROI): To optimize the detection process, I applied a region of interest (ROI) filter to focus on the area where the lanes are most likely to appear, reducing the impact of irrelevant background details.

- Hough Line Transform: The Hough Line Transform was used to identify straight lines in the processed image, representing the lanes in the video footage.

- Line Averaging: By implementing slope and intercept averaging techniques, I was able to smooth the lane detection process and eliminate noise in the results, ensuring the lane boundaries remained consistent throughout the video.

- Real-time Processing: The system processes video frames in real time, displaying the lane markings superimposed on the original video to give immediate feedback on lane tracking.

Click Here to Access Project

The Future Force

Conscious Entity